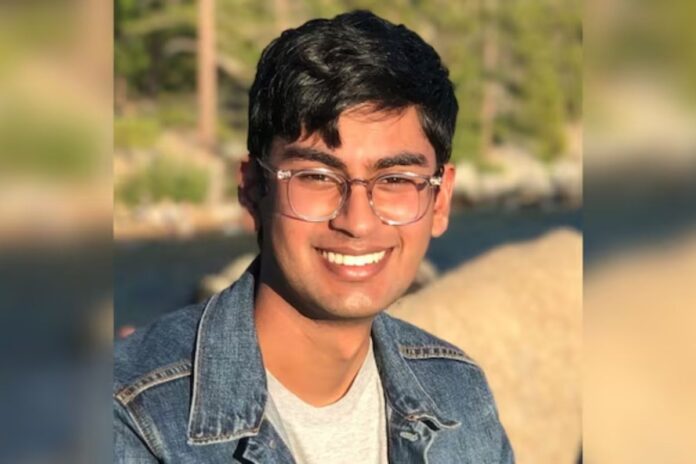

Suchir Balaji, a 26-year-old former researcher at artificial intelligence giant OpenAI and a prominent whistleblower, was found dead in his San Francisco apartment on November 26. Reports indicate that his death is being investigated as a suicide, with no evidence of foul play discovered so far.

The San Francisco Police Department responded to a wellness check requested by Balaji’s friends and colleagues, who had grown concerned about his safety. Upon entering the apartment, officers discovered Balaji’s body. “Officers and medics arrived on scene and located a deceased adult male from what appeared to be a suicide. No evidence of foul play was found during the initial investigation,” police said in a statement quoted by the San Francisco Chronicle. The city’s chief medical examiner later confirmed the manner of death as suicide.

Balaji’s passing has sent shockwaves through the tech community and beyond, with reactions pouring in on social media. Tesla CEO Elon Musk responded with a cryptic “hmm” on X, the platform formerly known as Twitter, offering no further elaboration.

Balaji, who left OpenAI in August, had publicly accused the company of engaging in unethical practices, including the unauthorized use of copyrighted material to train its flagship product, ChatGPT. His allegations became a focal point for several lawsuits against OpenAI, filed by authors, programmers, and journalists who claim their copyrighted works were exploited without permission.

In an interview with The New York Times shortly after his resignation, Balaji shared his concerns about OpenAI’s operations. “If you believe what I believe, you have to just leave the company,” he stated. He argued that technologies like ChatGPT were not only undermining copyright laws but also damaging the broader internet ecosystem by using data without proper consent.

On October 24, Balaji shared his final post on X, shedding further light on his concerns about generative AI and copyright laws. “I recently participated in a NYT story about fair use and generative AI, and why I’m sceptical ‘fair use’ would be a plausible defence for a lot of generative AI products,” he wrote. He elaborated on his doubts about the legal defence of “fair use,” stating that generative AI products often create substitutes that directly compete with the data they were trained on.

Balaji’s accusations gained traction as a rallying point for critics of generative AI, amplifying legal and ethical debates about the technology. His outspoken stance also earned him both admiration and criticism within the AI community. While some hailed him as a principled whistleblower, others questioned his motives and timing.

The lawsuits against OpenAI, fueled in part by Balaji’s revelations, continue to challenge the legality of using copyrighted material in AI training. His death has left many wondering whether the pressure and scrutiny he faced contributed to his tragic end.

For now, Balaji’s passing leaves behind a complex legacy: that of a researcher whose voice played a critical role in shaping the discourse around ethical AI, but whose life ended abruptly and under tragic circumstances. His concerns about the unchecked growth of generative AI remain part of an ongoing conversation about the future of technology and its impact on society.