Researchers at Johns Hopkins University (JHU) have developed an imitation learning technique that enables a surgical robot to perform complex medical procedures with precision close to that of human surgeons. The Da Vinci Surgical System, already an established tool for minimally invasive surgery, uses an artificial intelligence approach that lets it learn by watching videos of actual surgeries, rather than requiring each surgical move to be programmed directly.

This work, presented at the Conference on Robot Learning in Munich, Germany, is a significant step toward autonomous robotic surgery, in which robots could operate without real-time human input.

Robotic Surgery Reaches New Level of Autonomy.

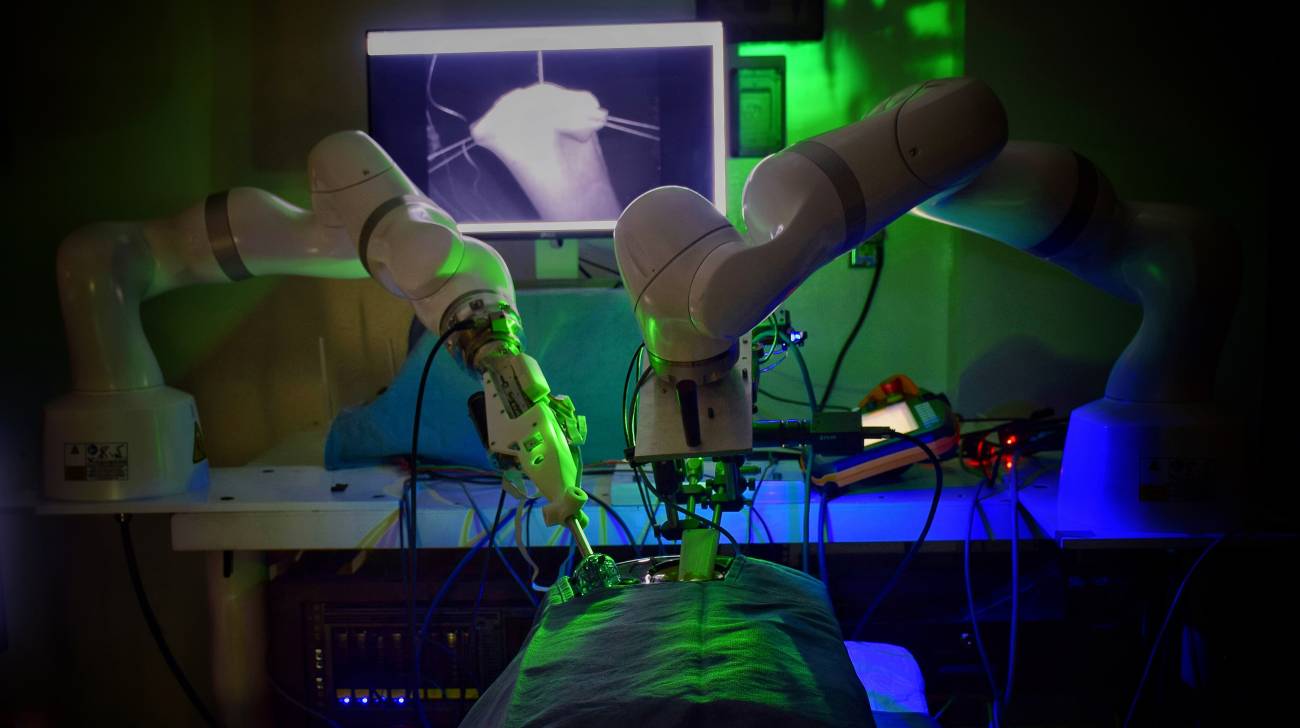

The Da Vinci Surgical System, widely used in hospitals around the world, allows surgeons to perform minimally invasive procedures through small or no incisions by replicating a surgeon’s hand movements with precision. Equipped with multiple arms controlled via a console, the system includes a 3D high-definition vision system, a robotic arm for camera management, and a lighting system for optimal visibility during procedures. Until now, the Da Vinci robot has depended heavily on human-controlled inputs, with surgeons directing its every movement in real time.

With JHU’s new imitation learning approach, this dependency is greatly reduced. Rather than requiring step-by-step instructions for each task, the Da Vinci system can learn essential surgical tasks by analyzing hundreds of videos captured by the wrist cameras embedded within its robotic arms. This allows the robot to internalize and replicate complex procedures, such as suturing, needle manipulation, and tissue handling, with an accuracy that could previously be achieved only by highly trained human surgeons.
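To make the idea of learning from demonstrations concrete, here is a minimal sketch of behavior cloning, the simplest form of imitation learning. It is a toy under stated assumptions, not the JHU model: the scalar "needle offset" observation, the `expert` function, and the linear policy are all hypothetical stand-ins for video frames, recorded surgeon motions, and a neural network.

```python
# Toy behavior cloning: learn a policy from demonstrations instead of hand-coding it.
# Every name here is hypothetical; none of it reflects the JHU model or a Da Vinci API.

def fit_linear_policy(demos):
    """Least-squares fit of action = gain * observation from (observation, action) pairs."""
    num = sum(obs * act for obs, act in demos)
    den = sum(obs * obs for obs, _ in demos)
    if den == 0:
        raise ValueError("demonstrations must include a non-zero observation")
    return num / den

def expert(offset_mm):
    """Stand-in for a human demonstrator: move halfway back toward the target."""
    return -0.5 * offset_mm

# "Recorded" demonstrations: what the expert did at each observed offset.
demos = [(o, expert(o)) for o in (-4.0, -2.0, 1.0, 3.0, 5.0)]

# The learner never sees the expert's rule, only the recorded pairs.
gain = fit_linear_policy(demos)

def policy(offset_mm):
    """Learned policy: imitates the expert on offsets it was never shown."""
    return gain * offset_mm

print(round(gain, 3))
print(round(policy(2.0), 3))
```

The learner recovers the expert's behavior purely from examples, which is the same principle applied at far larger scale when a model is trained on surgical video.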

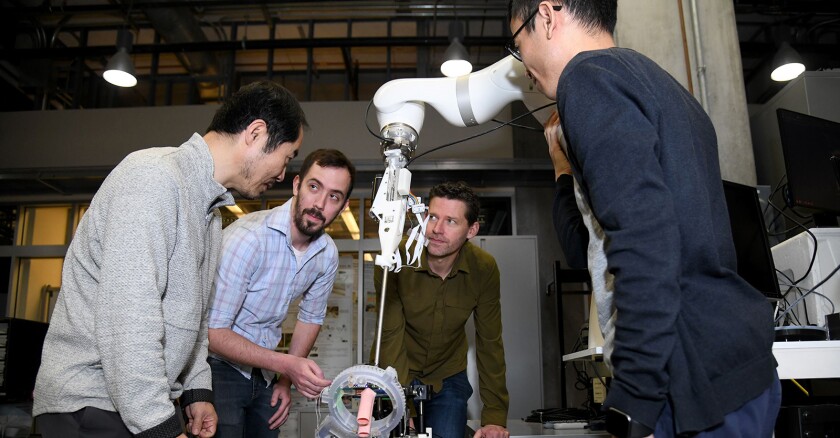

JHU’s team of researchers, leading experts in surgical robotics, is optimistic that the breakthrough will pave the way for autonomous surgeries in the near future. “This is an unprecedented milestone in imitation learning,” stated the lead researcher, who emphasized that the robot’s adaptability has surpassed initial expectations. During the testing phase, the Da Vinci robot even autonomously retrieved dropped needles, a task it was not explicitly trained to perform. This adaptability highlights the potential of robotic systems to act independently in the operating room.

An AI Model to “Speak Surgery” Using Mathematical Movements.

The foundation of this breakthrough is the AI architecture developed by the JHU team, which functions similarly to models like ChatGPT but is tailored specifically to surgical procedures. This architecture, dubbed the “surgical language” model by the researchers, teaches the Da Vinci robot to interpret and replicate intricate medical movements by converting them into mathematical representations of the robot’s motion. By “speaking surgery” in this way, the robot learns to perform tasks that demand fine dexterity.
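One simple way to picture a movement turned into a mathematical representation is to encode a tool path as a sequence of step-by-step displacement vectors. The sketch below is an illustrative assumption, since the article does not specify the encoding the JHU model uses, and the function names and sample trajectory are hypothetical.

```python
# Illustrative only: encode a tool-tip path as relative displacement vectors.
# The encoding, names, and sample data are assumptions, not the JHU model's format.

def to_relative_actions(positions):
    """Turn absolute (x, y, z) positions into per-step displacement vectors."""
    return [
        tuple(b_i - a_i for a_i, b_i in zip(a, b))
        for a, b in zip(positions, positions[1:])
    ]

def replay(start, actions):
    """Rebuild a path by accumulating displacements from a starting position."""
    path = [tuple(start)]
    for step in actions:
        path.append(tuple(p + d for p, d in zip(path[-1], step)))
    return path

# A made-up tool-tip trajectory in millimetres.
trajectory = [(0, 0, 0), (1, 0, 0), (1, 2, 0), (1, 2, -1)]
actions = to_relative_actions(trajectory)

print(actions)
print(replay((0, 0, 0), actions) == trajectory)
```

Because each step is expressed relative to the previous position, the same action sequence can be replayed from a different starting point, which is one reason a numeric encoding of motion is convenient for a learning model.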

Because the model generalizes across tasks, it can learn new procedures quickly, significantly reducing the traditional reliance on hand-coding each movement for a new surgery. With this capability, surgical robots could be trained on a wide variety of procedures and then switched between them with minimal reprogramming. The savings in time and resources could be substantial, since each surgery type would no longer need individualized programming.

The imitation learning process used for the Da Vinci robot has another advantage: it reduces the potential for human error in robot programming. Instead of relying on human programmers to account for every potential movement or adjustment, the robot learns directly from real-life examples, which gives a closer approximation of human expertise. This technology could not only speed up the training of new robots but also support a higher standard of precision across procedures.

Potential New Horizons for Autonomous Robot-Assisted Surgeries.

The success of imitation learning in the Da Vinci system opens the door to autonomous robotic surgery with many potential use cases. As the system becomes capable of adapting to new procedures simply by “watching” them, it could be deployed in remote areas with limited access to skilled surgeons, or in high-stakes surgical environments such as space missions or disaster zones, where human intervention is limited or risky.

Medical experts are hopeful about the implications of JHU’s breakthrough, envisioning a future where robots perform routine surgeries without the need for human operators. This approach could help reduce wait times, improve access to quality surgical care, and alleviate some of the pressures faced by human surgeons, who often work in highly stressful environments. Additionally, robots trained in this way could assist with tasks that are physically taxing for human surgeons, such as lengthy surgeries that require extreme precision and endurance.

The prospect of autonomous surgical robots also raises important questions about safety and reliability, particularly in critical medical procedures. Rigorous testing, validation, and regulatory approval will be crucial before fully autonomous robots can be deployed in real-world settings. According to the JHU research team, future developments will likely focus on ensuring these robots can handle complex situations, such as unexpected complications or variations in patient anatomy.

Challenges and Future Outlook for Autonomous Robotic Surgery.

Despite the promising results, the path to full autonomy in surgical robotics is fraught with challenges. Surgical procedures are inherently unpredictable, and every patient’s physiology is unique, so robotic systems must be able to adapt in real time. JHU’s imitation learning model marks a substantial step toward this goal, yet further advances in sensor technology, AI algorithms, and real-time processing will be required to ensure that robots can respond effectively to unforeseen issues.

The JHU team’s research has garnered significant attention from the medical and robotics communities, who recognize the potential for autonomous robots to transform healthcare. At the Conference on Robot Learning, the JHU presentation received considerable acclaim, with industry leaders predicting that the approach could set new standards for robotic capabilities in medicine.